Every digital camera has a sensor to capture light. The light that falls on the sensor is what forms the image.

What is a camera sensor?

A camera sensor is made up of millions of tiny light-collecting dots called pixels. Each pixel records the brightness (and colour) of the light that falls on that specific point of the sensor during an exposure.

If your camera has a 24-megapixel sensor, like my Nikon Z6III, it contains roughly 24,000,000 of these individual light-collecting spots arranged in a precise grid. Each one contributes a small piece of information.
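The "mega" in megapixel simply means millions, so the pixel count is the width of the grid multiplied by its height. The Python sketch below shows that arithmetic. It assumes a 3:2 grid of 6000 × 4000 pixels purely for illustration; these are not the exact dimensions of any particular camera, and the function names are made up for this example.

```python
import math


def pixel_count(width_px: int, height_px: int) -> int:
    """Total number of pixels in a width x height grid."""
    return width_px * height_px


def megapixels(width_px: int, height_px: int) -> float:
    """Pixel count expressed in millions (megapixels)."""
    return pixel_count(width_px, height_px) / 1_000_000


def grid_dimensions(total_pixels: int, aspect_w: int = 3, aspect_h: int = 2) -> tuple:
    """Approximate width and height of a grid with this many pixels and this aspect ratio."""
    width = math.sqrt(total_pixels * aspect_w / aspect_h)
    height = width * aspect_h / aspect_w
    return round(width), round(height)


# Illustrative 3:2 grid (an assumption, not a specific camera's spec).
print(pixel_count(6000, 4000))      # 24000000
print(megapixels(6000, 4000))       # 24.0
print(grid_dimensions(24_000_000))  # (6000, 4000)
```

Going the other way, `grid_dimensions` shows that 24 million pixels in a 3:2 shape works out to about 6000 pixels across and 4000 pixels down.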

When all of those pixels are viewed together, their combined data forms the complete image you see – from broad shapes and tones down to fine textures and edges. The more pixels there are, the more finely the image can describe detail, assuming everything else in the chain, such as the lens and focus, is up to the task.

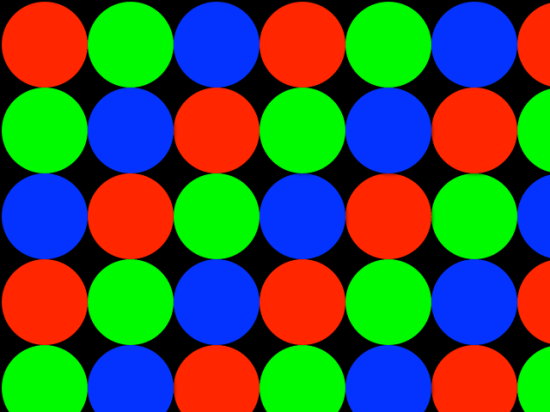

The image above is a simplified, highly magnified illustration of what those light-collecting dots look like on a sensor. In reality, they’re far smaller and packed much more tightly, but this gives a useful mental model for how an image is built up pixel by pixel.
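To make the grid idea concrete, here is a toy Python sketch that treats a sensor as a small grid of brightness readings and prints one character per pixel, row by row. It is a heavy simplification under stated assumptions: the grid is only 4 × 6 pixels, each value is an 8-bit greyscale brightness (0 is black, 255 is white), and colour is ignored. `sensor`, `SHADES` and `to_ascii` are hypothetical names for illustration only.

```python
# A toy "sensor": one brightness reading per pixel, arranged in a grid.
# Assumption: greyscale 8-bit values (0 = black, 255 = white); a real sensor
# also records colour information and has millions of pixels, not 24.
sensor = [
    [0, 40, 80, 120, 160, 200],
    [40, 80, 120, 160, 200, 240],
    [80, 120, 160, 200, 240, 255],
    [120, 160, 200, 240, 255, 255],
]

SHADES = " .:-=+*#%@"  # characters ordered from darkest to brightest


def to_ascii(grid):
    """Render each brightness value as one character, building the image row by row."""
    rows = []
    for row in grid:
        # Scale 0..255 onto the indices 0..len(SHADES) - 1.
        rows.append("".join(SHADES[v * (len(SHADES) - 1) // 255] for v in row))
    return "\n".join(rows)


print(to_ascii(sensor))
```

Each number on its own says very little, but printed together the characters form a smooth diagonal gradient from dark (top left) to bright (bottom right), which is the same principle as millions of pixels forming a photograph.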

Because a camera sensor has millions of pixels, it can capture lots of detail and colour, like this photo of the London skyline that I shot at night:

However, if the sensor had only a few hundred pixels, it would produce a very low-resolution image like this:

![]()
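One way to see why fewer pixels lose detail is to shrink a grid by averaging neighbouring pixels together. The Python sketch below does this with simple block averaging, a technique chosen here only for illustration (it is not how a camera works internally), and assumes the grid's width and height are exact multiples of the shrink factor. `downsample` and `stripes` are hypothetical names.

```python
def downsample(grid, factor):
    """Shrink a grid by averaging each factor x factor block into a single pixel.

    Assumes the grid's width and height are exact multiples of `factor`.
    """
    height, width = len(grid), len(grid[0])
    out = []
    for y in range(0, height, factor):
        row = []
        for x in range(0, width, factor):
            block = [grid[yy][xx]
                     for yy in range(y, y + factor)
                     for xx in range(x, x + factor)]
            row.append(sum(block) // len(block))  # integer average of the block
        out.append(row)
    return out


# Fine detail: thin vertical stripes alternating black (0) and white (255).
stripes = [[255 if x % 2 else 0 for x in range(8)] for _ in range(8)]

small = downsample(stripes, 2)
print(len(stripes) * len(stripes[0]), "->", len(small) * len(small[0]), "pixels")  # 64 -> 16 pixels
print(small[0])  # [127, 127, 127, 127]
```

Each new pixel covers one black and one white stripe, so the stripes average out to flat grey: detail finer than a single pixel simply can't be recorded.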

Sensor resolution and image detail

More pixels mean higher resolution, which allows an image to hold more detail. Sensor resolution is therefore one factor that affects how clear and sharp a photograph appears, particularly when images are viewed large or cropped heavily. However, resolution on its own doesn’t guarantee sharpness: lens quality, focus accuracy, camera stability, and processing all play a significant role in how much usable detail you actually see in the final image.
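Cropping is where resolution matters most, because a crop keeps only a fraction of the original pixels. The Python sketch below shows the arithmetic, assuming a 24 MP image of 6000 × 4000 pixels (illustrative dimensions, not a specific camera's spec); `crop` and `megapixels` are hypothetical helper names.

```python
def crop(width_px, height_px, keep_fraction):
    """Pixel dimensions left after keeping `keep_fraction` of both the width and the height."""
    return round(width_px * keep_fraction), round(height_px * keep_fraction)


def megapixels(width_px, height_px):
    """Pixel count expressed in millions (megapixels)."""
    return width_px * height_px / 1_000_000


# Assumed 24 MP, 3:2 starting image (illustrative dimensions only).
full = (6000, 4000)
for keep in (1.0, 0.5, 0.25):
    w, h = crop(*full, keep)
    print(f"keep {keep:.0%} of each side -> {w} x {h} = {megapixels(w, h):.1f} MP")
```

Because the pixel count scales with both width and height, keeping half of each side leaves only a quarter of the pixels (6 MP from 24 MP), and keeping a quarter of each side leaves just 1.5 MP. The arithmetic only counts pixels; a heavy crop can't recover detail the lens or focus didn't deliver in the first place.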